PartyBot: An IoT Device for Party Games and Interactive Language Learning

I developed this IoT device with five colleagues during my Master's studies, as coursework for an Internet of Things module. The goal of the project was to build a novel IoT device that incorporated sensors, actuators, cloud computing and a web UI. Our prototype, PartyBot, is a device for party games and interactive language learning, built using a system of ESP32 microcontrollers and the ESP Audio Development Framework (ESP-ADF).

System Overview

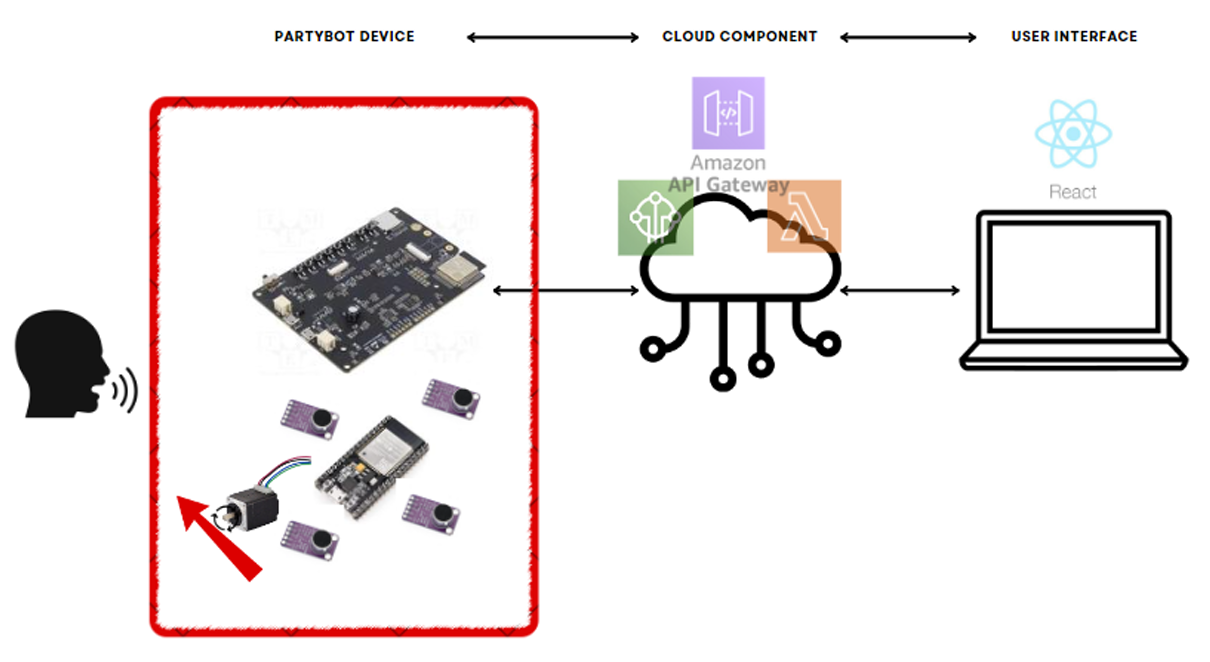

The concept of the game is simple: players define a set of banned words which they input to the device via a web UI, then the device listens passively to the players as they converse with one another. If the device detects that one of the players has said a banned word, it sounds an alarm and points an arrow at the player who said the word.

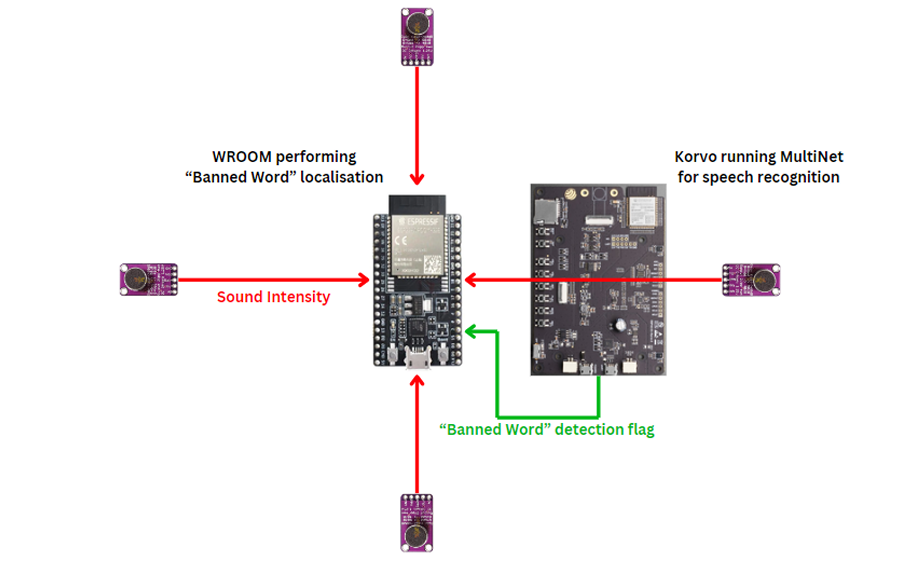

The primary hardware component is the ESP32-S3-Korvo-2 V3.1, a multimedia development board selected for its suitability for speech recognition applications. In addition to the speech recognition task, the Korvo handled communication with the cloud component of the system. Localising the source of each banned word was the job of a separate board, the ESP32-WROOM-32E. This board was connected to four external microphones, each covering a separate quadrant of the game space. The WROOM was also connected to a stepper motor that drove the arrow, which it rotated to point towards the source whenever a banned word was detected.
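The localisation step can be sketched on the host side as follows. This is only an illustration, not the firmware we ran: it assumes the speaker's quadrant is taken to be the microphone with the highest RMS level, and the quadrant bearings, the 200 steps per revolution, and all function names are hypothetical.

```python
import math

STEPS_PER_REV = 200  # assumed stepper resolution; not taken from the project

# Assumed bearing (in degrees) of the centre of each microphone's quadrant.
QUADRANT_BEARINGS = [45.0, 135.0, 225.0, 315.0]


def rms(samples):
    """Root-mean-square level of one microphone's sample window."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def loudest_quadrant(windows):
    """Index of the microphone whose window has the highest RMS level."""
    levels = [rms(w) for w in windows]
    return max(range(len(levels)), key=levels.__getitem__)


def steps_to_bearing(current_step, target_deg):
    """Signed step count for the shortest rotation to the target bearing."""
    target_step = round(target_deg / 360.0 * STEPS_PER_REV) % STEPS_PER_REV
    delta = (target_step - current_step) % STEPS_PER_REV
    if delta > STEPS_PER_REV // 2:
        delta -= STEPS_PER_REV  # going the other way round is shorter
    return delta
```

Given one window of samples per microphone captured around the moment of detection, `loudest_quadrant` picks the quadrant and `steps_to_bearing(current_step, QUADRANT_BEARINGS[q])` gives the signed number of steps to move the arrow.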

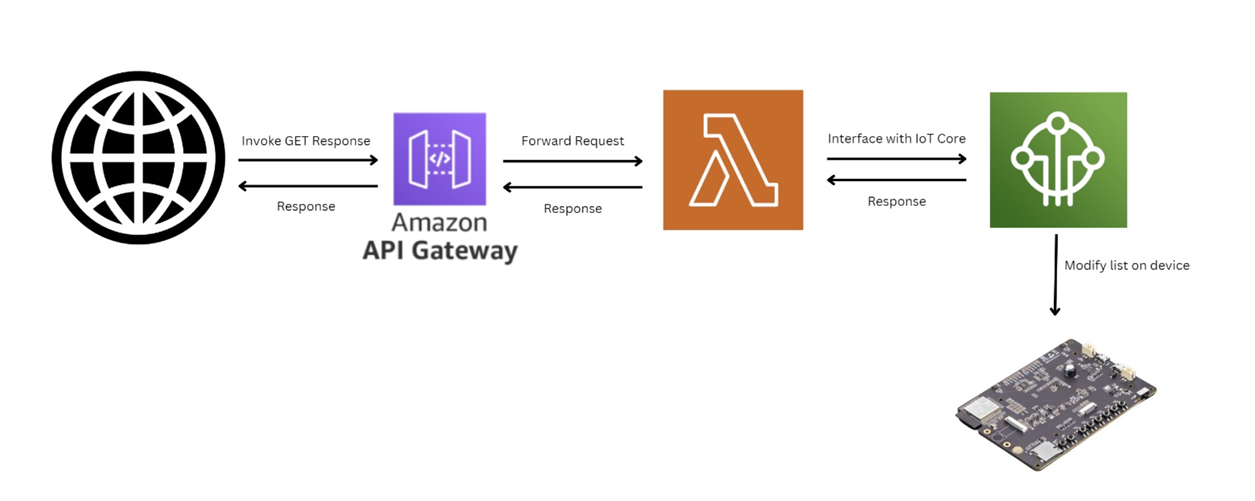

The hardware was integrated with cloud computing and a web UI to create an intuitive, interactive user experience. We selected the AWS cloud computing platform for this purpose, specifically AWS IoT Core and AWS Lambda: the former for communication between the device and the cloud, and the latter for processing logic. The board and the cloud communicated over the MQTT protocol, chosen for its suitability for applications where low bandwidth usage and low power consumption are paramount. The user interface was implemented using the React JavaScript library, and interacted with the cloud backend through AWS API Gateway's WebSocket API.
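To make the UI-to-device path concrete, here is a minimal sketch of what the processing step might look like. The topic name, the `words` field, and the payload shape are assumptions made for illustration, not the project's actual schema. The `publish` callable is injected so the logic can run anywhere; in a real Lambda it would wrap an MQTT publish call from the AWS SDK.

```python
import json

# Hypothetical topic name; the project's real topic layout is not shown here.
BANNED_WORDS_TOPIC = "partybot/banned-words"


def build_update(words):
    """Normalise a word list from the UI and return (topic, payload bytes)."""
    cleaned = []
    for word in words:
        w = " ".join(word.lower().split())  # lowercase, collapse whitespace
        if w and w not in cleaned:          # drop empties and duplicates
            cleaned.append(w)
    # Compact separators keep the MQTT payload small.
    payload = json.dumps({"banned_words": cleaned}, separators=(",", ":"))
    return BANNED_WORDS_TOPIC, payload.encode("utf-8")


def handler(event, publish):
    """Lambda-style entry point; `publish(topic, payload)` is supplied by the caller."""
    body = json.loads(event.get("body") or "{}")
    topic, payload = build_update(body.get("words", []))
    publish(topic, payload)
    count = len(json.loads(payload)["banned_words"])
    return {"statusCode": 200, "body": json.dumps({"count": count})}
```

Normalising the list in the cloud rather than on the device keeps the message small and spares the microcontroller the string handling.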

Speech Recognition on an Embedded CPU

The most technically demanding aspect of this project was implementing a speech recognition system on a low-power embedded CPU, particularly given the need to update that system dynamically via the cloud. Fortunately, the ESP32-S3-Korvo-2 board is built on the ESP32-S3 chip, which was designed with SIMD (Single Instruction, Multiple Data) instructions that accelerate neural network workloads. This makes the chip compatible with both WakeNet and MultiNet, lightweight neural-network speech recognition models designed specifically for low-power embedded CPUs.

For this project, MultiNet was used, with the list of command words for the network representing the banned words list. The system was designed so that this list could be changed dynamically via the cloud, and the model restarted with an updated list of command words.
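The update logic can be sketched roughly as below. This is a host-side illustration under stated assumptions, not the firmware itself: the command limit, the numbering scheme, and both function names are hypothetical, and the speech recognition library calls that load the commands on the device are not shown.

```python
MAX_COMMANDS = 200  # assumed cap; check against the model actually deployed


def build_command_table(banned_words, max_commands=MAX_COMMANDS):
    """Map banned words to (command_id, phrase) pairs, with ids starting at 1."""
    table = []
    seen = set()
    for word in banned_words:
        if len(table) >= max_commands:
            break  # silently ignore words beyond the assumed model limit
        phrase = " ".join(word.lower().split())
        if not phrase or phrase in seen:
            continue  # skip blanks and duplicates
        seen.add(phrase)
        table.append((len(table) + 1, phrase))
    return table


def needs_restart(current_table, new_table):
    """Restart the model only when the set of phrases has actually changed."""
    return [p for _, p in current_table] != [p for _, p in new_table]
```

Comparing the new table against the current one before restarting avoids interrupting recognition when the cloud resends an unchanged list.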